Spellbinding Docker - The Art of Multi-Stage Builds

Ana-Ioana Vlad and Kamil Choroba · September 30, 2024 · 16 min read

In this article, we are about to embark on a two-part journey through the magical world of Docker. In the first part, we will follow a sorceress as she refines her Docker spells with multi-stage builds, learning how to transform an old, inefficient Dockerfile into a streamlined, powerful tool. In the second part, we’ll step into the real world to explore practical examples and applications of these magical techniques in production environments. By the end, you’ll be ready to wield the power of Docker multi-stage builds yourself.

1. Story Time: Spellbinding Docker

In this section, we’ll follow the sorceress as she confronts an outdated Dockerfile, plagued by inefficiency and lack of structure. Through her journey, we’ll explore the power of Docker multi-stage builds and how they can transform a cumbersome process into a sleek, organized solution. We’ll witness the evolution of her Dockerfile, uncovering how separating development, testing, and production into distinct stages improves performance, clarity, and maintainability. Let’s dive into the art of Docker refactoring and reveal the magic of multi-stage builds.

Intro - The Magical Docker Odyssey

Welcome to a tale of magic and mastery. In the realm of software, a sorceress embarks on a quest to transform ancient Docker spells into powerful, modern solutions. Join us as we unravel her journey, discovering the secrets of Docker multi-stage builds.

Chapter 1 - The Ancient Docker Spellbook

Our story begins with a challenge. The sorceress encounters the ancient Docker spellbook - an archaic, (almost) single-stage build. Cumbersome and inefficient, it hinders her magical prowess. Let’s explore how this old code held back her potential.

FROM 0123456789.dkr.ecr.eu-west-1.amazonaws.com/some-node-image AS builder

RUN curl -sqL https://dl.yarnpkg.com/rpm/yarn.repo | tee /etc/yum.repos.d/yarn.repo && \
    yum groupinstall -y "Development Tools" && \
    yum install -y yarn git && \
    yum clean all

WORKDIR /app

COPY package.json yarn.lock .npmrc ./

RUN yarn install --frozen-lockfile

COPY tsconfig.prod.json ./

COPY src ./src/

COPY assets ./assets/

RUN yarn build

# Add prod dependencies into dist bundle

RUN yarn install --frozen-lockfile --production --modules-folder ./dist/node_modules

FROM 0123456789.dkr.ecr.eu-west-1.amazonaws.com/some-node-image

RUN yum install -y https://github.com/krallin/tini/releases/download/v0.18.0/tini_0.18.0.rpm && \
    yum clean all

WORKDIR /app

ENV NODE_ENV=production

ENV PORT=3000

COPY --from=builder /app/dist ./

COPY --from=builder /app/src/fragments ./fragments

COPY --from=builder /app/assets ./assets

CMD ["node", "--max-http-header-size=20480", "index.js"]

# Node binary was not designed to run as PID=1, tini is a very small init system that is default for Docker

# It ensures that zombie processes are cleaned up and handles SIGTERM, SIGINT and SIGHUP signals.

ENTRYPOINT ["/bin/tini", "--"]

Looking deeper at this ancient Docker spellbook, we can already identify some of the challenges that the sorceress faced:

Use of a Single Stage for Building and Dependencies: The dependencies and the build process are handled in the same stage. Separating these into different stages could improve the build efficiency and caching.

No Clear Separation of Concerns: The Dockerfile does not clearly separate the concerns of building, testing, and deploying, which can make maintenance and updates more challenging.

Lack of Modularity: The Dockerfile has no dedicated development or testing stages, so the same file cannot be reused for local development or for running tests in isolation.

The sorceress’s first encounter with Docker revealed many inefficiencies: an ancient Dockerfile with no clear structure, a single-stage build, and a lack of separation between development and production. As she digs deeper into this configuration, she realizes that the inefficiencies can be remedied. Soon, she will discover the power of multi-stage magic.

Chapter 2 - The Revelation of Multi-Stage Magic

In a moment of revelation, our sorceress discovers multi-stage Docker builds. A powerful spell that segments her tasks into development, testing, and production. Each stage, a step closer to efficiency and clarity.

Multi-Stage Magic (After Refactoring):

In Docker terms, multi-stage builds allow her to break down the build process into distinct, optimized stages, each serving a specific purpose.

# ======================================================================

# Base image

# ======================================================================

FROM 0123456789.dkr.ecr.eu-west-1.amazonaws.com/some-node-image AS base

WORKDIR /app

COPY package.json yarn.lock playwright.config.ts .npmrc tsconfig.prod.json tsconfig.json .prettierignore jest.config.js ./

COPY src ./src/

COPY assets ./assets/

COPY smoke-tests ./smoke-tests/

COPY __tests__ ./__tests__/

RUN yarn install --frozen-lockfile

# ======================================================================

# Development stage

# ======================================================================

FROM base AS dev

CMD ["yarn", "dev"]

# ======================================================================

# Unit tests stage

# ======================================================================

FROM base AS unit

COPY . .

RUN yarn lint && \
    yarn format:test && \
    yarn test:ci

# ======================================================================

# E2E stage

# ======================================================================

FROM mcr.microsoft.com/playwright:v1.47.0-jammy AS e2e

WORKDIR /app

COPY --from=base /app/ ./

RUN npx playwright install

RUN yarn test:smoke

# ======================================================================

# Build stage

# ======================================================================

FROM base AS build

ENV NODE_ENV=production

COPY . .

RUN yarn build

# Add prod dependencies into dist bundle

RUN yarn install --frozen-lockfile --production --modules-folder ./dist/node_modules

# ======================================================================

# Production stage

# ======================================================================

FROM 0123456789.dkr.ecr.eu-west-1.amazonaws.com/some-node-image

WORKDIR /app

ENV NODE_ENV=production

ENV PORT=3000

ENV ARCH=amd64

# For Mac M1, use following arch

# ENV ARCH=arm64

RUN curl -fsSLO "https://github.com/krallin/tini/releases/download/v0.19.0/tini-${ARCH}" \
    && ln -s /app/tini-${ARCH} /usr/local/bin/tini

RUN chmod +x "tini-${ARCH}"

COPY --from=build /app/dist ./

COPY --from=build /app/src/fragments ./src/fragments

COPY --from=build /app/assets ./assets

RUN rm -rf ./__tests__ ./smoke-tests ./playwright.config.ts

CMD ["node", "--max-http-header-size=20480", "src/index.js"]

# Node binary was not designed to run as PID=1, tini is a very small init system that is default for Docker

# It ensures that zombie processes are cleaned up and handles SIGTERM, SIGINT and SIGHUP signals.

ENTRYPOINT ["tini", "--"]

Let’s reflect on the changes that the sorceress made to her Docker spellbook:

Multi-Stage Builds (development, unit tests, E2E tests, build, production): This approach allows for separation of concerns, making the Dockerfile more organized and efficient, each stage being tailored for specific needs.

Dedicated Testing Stages: Having separate stages for unit tests and E2E tests ensures that testing is an integral part of the build process.

Isolation of Development Environment: The dev stage isolates the development environment, which can be beneficial for development and debugging purposes without affecting the production build.

Improved Modularity: The Dockerfile is now modular, with each stage serving a specific purpose. This makes it easier to maintain and update the codebase.

Through the use of multi-stage builds, the sorceress has transformed her Dockerfile into a powerful tool, streamlining development, testing, and production. By isolating each stage, she has created a Docker environment that is more modular, efficient, and easier to maintain. Her refactoring journey highlights the power of multi-stage builds to optimize processes and improve performance, paving the way for even greater Docker mastery.
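The production stage above sets ARCH by hand and asks Apple Silicon users to edit the file. As a minimal sketch of one possible alternative, and assuming the image is built with BuildKit (which predefines the TARGETARCH build argument), the architecture could be picked up automatically. This stage is illustrative and not part of the sorceress’s Dockerfile; like the original, it assumes the base image ships curl and has /usr/local/bin on the PATH.

```dockerfile
FROM 0123456789.dkr.ecr.eu-west-1.amazonaws.com/some-node-image

WORKDIR /app

# TARGETARCH is predefined by BuildKit (for example amd64 or arm64).
# Declaring it makes the value visible to the instructions in this stage.
ARG TARGETARCH

# Download the tini binary that matches the target architecture
# and make it executable in a single layer.
RUN curl -fsSL -o /usr/local/bin/tini \
      "https://github.com/krallin/tini/releases/download/v0.19.0/tini-${TARGETARCH}" \
    && chmod +x /usr/local/bin/tini

ENTRYPOINT ["tini", "--"]
```

With this variant the same Dockerfile can be built on Intel and ARM machines without editing an ENV line.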

Now that her Dockerfile is streamlined, the sorceress seeks a way to orchestrate her Docker spells across different environments. This search leads her to Docker Compose.

Chapter 3 - Docker Compose Symphony

Our sorceress then encounters Docker Compose, a symphony of containers. In this analogy, Docker Compose acts as a conductor, coordinating each stage of her multi-stage build, enabling seamless transitions between development, testing, and production.

Let’s explore how Docker Compose complements the multi-stage Docker builds:

# this will target the dev stage in our Dockerfile
services:
  web:
    build:
      context: .
      target: dev

Running docker-compose up, the sorceress conjures a development environment, where she can weave her magic with ease. The target: dev directive in the Docker Compose file allows her to focus only on the development stage, isolating her environment for optimal productivity. The value dev corresponds to the alias defined in the Dockerfile:

FROM base AS dev

Beyond this, the sorceress can change the target value to either unit or e2e, running tests in isolation to thoroughly refine her spells before production. Docker Compose allows her to orchestrate containers for different environments (development, testing, and production), ensuring consistency across teams and enhancing collaboration. By isolating each stage, she makes her workflow more agile and efficient. Docker’s layer cache also speeds up iterative development, because unchanged stages are not rebuilt. Docker Compose thus integrates seamlessly with multi-stage builds, offering a dynamic and scalable approach for both rapid development and thorough testing.

However, the sorceress knows that even the most powerful Docker spells need a mechanism to ensure they’re deployed efficiently. Enter Jenkins, her ally in continuous integration and deployment.

Chapter 4 - Jenkins Harmony with Targeted Builds

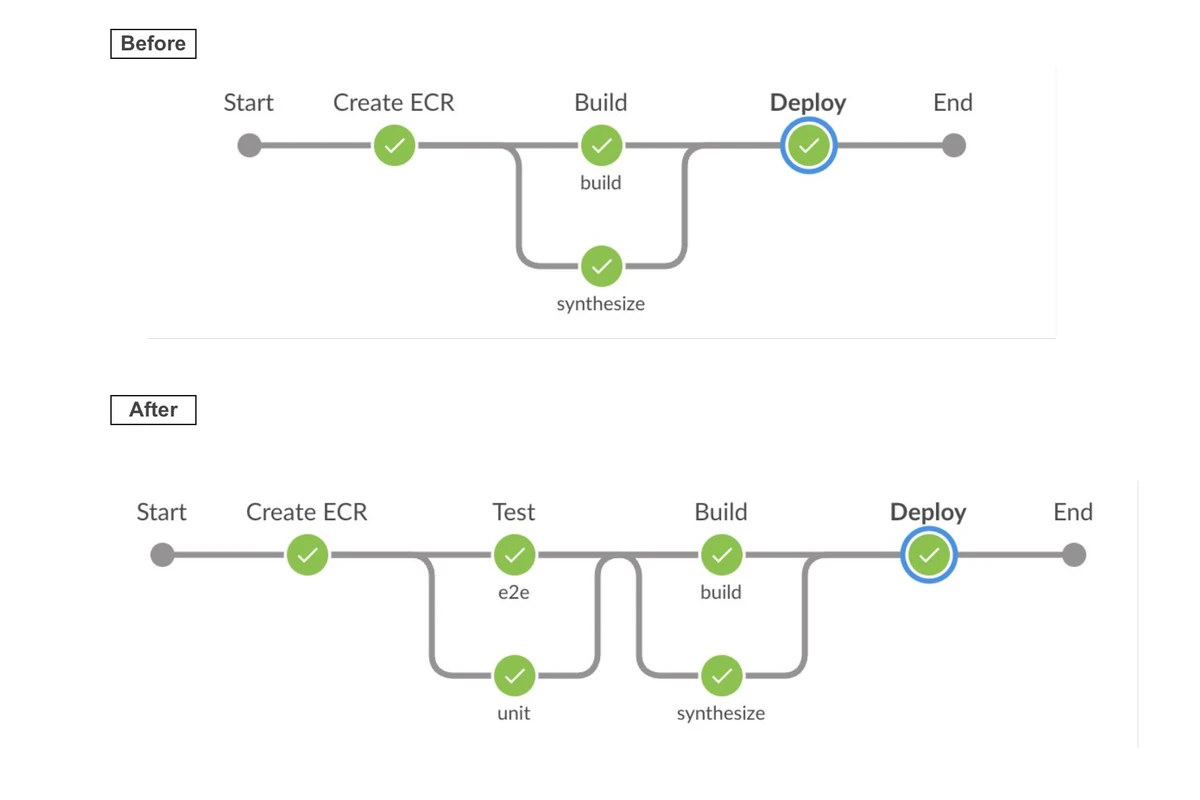

In our sorceress’ journey, Jenkins becomes an ally. Together, they harmonize CI/CD with targeted builds. Each Jenkins stage aligns with the Dockerfile, ensuring efficient resource usage and faster, smoother builds.

Let’s explore how Jenkins orchestrates the Docker builds. The sorceress defines parallel stages for unit and E2E tests before the build stage, like so:

stage('Test') {
    parallel {
        stage('unit') {
            agent { node { label 'build-node20' } }
            steps {
                script {
                    sh 'scripts/unit.sh'
                }
            }
        }
        stage('e2e') {
            agent { node { label 'build-node20' } }
            steps {
                script {
                    sh 'scripts/e2e.sh'
                }
            }
        }
    }
}

The most relevant parts of the scripts unit.sh and e2e.sh are:

# Build the container and run only the unit tests
docker build --target unit --pull -t "$SERVICE:$DOCKER_TAG-unit" .

# Build the container and run only the E2E tests
docker build --target e2e --pull -t "$SERVICE:$DOCKER_TAG-e2e" .

Here is a snapshot of the Jenkins pipeline before and after using targeted builds:

ℹ️ By running unit and E2E tests in parallel, Jenkins reduces the overall build time, ensuring faster feedback and quicker deployments. At the same time, the build stage becomes a single responsibility stage, focusing solely on building the production image.

In this scenario, the Jenkins pipeline orchestrates the Docker builds, ensuring that each stage has a clear, well-defined role with a single responsibility and does no more than is actually needed. This alignment between Jenkins and Docker stages streamlines the CI/CD process, making it more efficient and reliable.

With Jenkins in place, the sorceress finds even more benefits beyond just speed and efficiency. These revelations promise to enhance collaboration and maintainability.

Chapter 5 - Beyond the Magic: Additional Benefits

Our journey goes beyond mere speed and efficiency. The sorceress realizes the additional benefits of her multi-stage Docker spells - enhanced readability, easier maintenance, and improved collaboration across her team.

- Enhanced Readability: The Dockerfile is now more organized and easier to read, with each stage serving a specific purpose. This clarity makes it simpler for the sorceress and her team to understand and maintain the codebase.

- Separation of Concerns: The Dockerfile now clearly separates the concerns of building, testing, and deploying, making it easier to manage and update the codebase.

- Improved Collaboration: The modular structure of the Dockerfile encourages collaboration among team members. Each stage can be worked on independently, allowing for parallel development and testing.

- Potential Speed Improvements: The multi-stage Docker builds can improve build times by leveraging caching and reducing the size of the final image. This can lead to faster deployments and more efficient resource usage. Coupled with Jenkins, the sorceress could further optimize the CI/CD process, ensuring that each stage is executed in parallel, maximizing efficiency and speed.
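How much the layer cache helps depends on the order of instructions: a layer is reused only while everything copied before it is unchanged. The following is a minimal sketch of a cache-friendly base stage, assuming the same placeholder image and file names as the Dockerfile in Chapter 2. It is one possible arrangement, not the sorceress’s actual file.

```dockerfile
FROM 0123456789.dkr.ecr.eu-west-1.amazonaws.com/some-node-image AS base

WORKDIR /app

# Copy only the dependency manifests first. As long as these files are
# unchanged, the install layer below is served from the cache.
COPY package.json yarn.lock .npmrc ./

RUN yarn install --frozen-lockfile

# Source files change far more often than dependencies, so they are copied
# last. Editing them invalidates only the layers from this point on.
COPY tsconfig.json tsconfig.prod.json ./
COPY src ./src/
COPY assets ./assets/
```

With this ordering, a change to a file under src no longer triggers a full dependency install on the next build.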

Epilogue - The Sorceress’ Triumph

As our tale concludes, we see a kingdom transformed. The sorceress’s journey with Docker multi-stage builds has brought magic to the realm of software, revolutionizing the way builds are crafted and optimized. Through her mastery of Docker Compose and Jenkins, she has orchestrated a powerful symphony of efficiency, flexibility, and scalability. May her story inspire you to embark on your own adventures in optimization.

But her journey doesn’t end here. Armed with her newfound knowledge, the sorceress is prepared to tackle even greater challenges. From scaling microservices across vast infrastructures to integrating seamless CI/CD pipelines, she knows that the power of Docker will allow her to build, test, and deploy more efficiently in any kingdom she encounters.

And so, we bid her farewell as she sets out on her next quest, eager to refine her craft, uncover new spells, and further enhance her Docker magic. With the wisdom gained from her journey, she is ready to face whatever challenges lie ahead, confident in her mastery of Docker’s transformative capabilities.

In the next section we will explore practical applications and examples of Docker stages in real production scenarios.

2. Practical applications and examples

In this section we are going to demonstrate how Docker stages can be used in real production scenarios.

Basic setup

You can find all the examples in the GitHub repository: https://github.com/KamilChoroba/understanding-docker-stages.git

Clone this repository and open it in an editor of your choice. We will use Visual Studio Code:

$ ~ git clone git@github.com:KamilChoroba/understanding-docker-stages.git

$ ~ cd understanding-docker-stages

understanding-docker-stages $ code

ℹ️ The command code only works if you have Visual Studio Code installed and it is executable from the command line.

Scenario 1, basic Dockerfile

In the first scenario we create a simple base Docker image from a public Docker image (alpine:3.20.2) and set the working directory /app for all subsequent commands.

Go to scenario-01 inside of understanding-docker-stages/scenarios.

understanding-docker-stages $ cd scenarios/scenario-01

Open the Dockerfile and read the content.
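Based on the description of this scenario, such a Dockerfile might look roughly like the following. This is a sketch reconstructed from the text, not a copy of the file in the repository, and the stage alias base is an assumption.

```dockerfile
# Public Alpine image used as the starting point
FROM alpine:3.20.2 AS base

# Every following instruction, and any shell started in the container,
# uses /app as its working directory
WORKDIR /app
```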

Build + Run this docker image locally and explore the content:

understanding-docker-stages/scenario-01 $ docker build -t docker-stages:1 .

understanding-docker-stages/scenario-01 $ docker run -it docker-stages:1 /bin/sh

ℹ️ Commands explained:

- docker build executes Docker's build process and generates a Docker image.
- -t docker-stages:1 tags the created image. Without it, the image gets no name and can only be referenced by its ID. The part after the colon (:1) is the tag, which can also be a name, e.g. :main.
- . specifies the build context path, ideally the directory where your Dockerfile is located.
- docker run runs a Docker image locally.
- -it runs the container interactively with a terminal attached, so you can work in it much as you would over ssh.
- docker-stages:1 is the image and tag to run.
- /bin/sh is the command to execute in the container. It gives us a shell in which we can run commands.

After starting the container we can explore it a bit. You will notice that you are immediately in the directory /app, as set in the Dockerfile.

/app # pwd

/app

/app # ls -lah

total 8K

drwxr-xr-x 2 root root 4.0K Jul 23 06:14 .

drwxr-xr-x 1 root root 4.0K Aug 23 13:19 ..

/app #

/app # exit

Scenario 2, set up a basic TypeScript project

In the second scenario we are going to set up an npm project in Docker. For this we create a new stage and keep the base stage untouched. We will install the npm package, copy all source files into the Docker image and install all dependencies.

Go to scenario-02 inside of understanding-docker-stages/scenarios.

understanding-docker-stages/scenario-01 $ cd ../scenario-02

Open the Dockerfile and check the content.
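As a rough sketch of the stage described here (not the repository's actual file), the Dockerfile could be extended as follows. The stage alias dependencies and the use of apk and npm install are assumptions; the copied file names are taken from the directory listing shown in this scenario.

```dockerfile
FROM alpine:3.20.2 AS base
WORKDIR /app

# New stage built on top of base; base itself stays untouched.
# The alias "dependencies" is hypothetical.
FROM base AS dependencies

# Install Node.js and npm from the Alpine package repositories
RUN apk add --no-cache nodejs npm

# Copy the project manifest, compiler configuration and sources
COPY package.json tsconfig.json ./
COPY src ./src/

# Install all dependencies (development ones included);
# this also generates package-lock.json inside the image
RUN npm install
```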

Build + Run this docker image locally and explore the content:

understanding-docker-stages/scenario-02 $ docker build -t docker-stages:2 .

understanding-docker-stages/scenario-02 $ docker run -it docker-stages:2 /bin/sh

Here too, we can explore the content of the Docker image a bit:

/app # ls -lah

total 56K

drwxr-xr-x 1 root root 4.0K Jul 23 06:17 .

drwxr-xr-x 1 root root 4.0K Sep 6 13:20 ..

drwxr-xr-x 47 root root 4.0K Jul 23 06:17 node_modules

-rw-r--r-- 1 root root 20.9K Jul 23 06:17 package-lock.json

-rw-r--r-- 1 root root 344 Jul 22 19:32 package.json

drwxr-xr-x 2 root root 4.0K Jul 23 06:15 src

-rw-r--r-- 1 root root 11.7K Jul 22 19:32 tsconfig.json

/app # exit

Scenario 3, build and test the project

In the next scenario we will run the service's build command to generate the compiled project assets. Other commands, such as the tests, could be executed in the same way.

Go to scenario-03 inside of understanding-docker-stages/scenarios.

understanding-docker-stages/scenario-02 $ cd ../scenario-03

Open the Dockerfile and check the content.
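A build stage of this kind could be sketched as below. The file in the repository may differ: the stage aliases and the assumption that package.json defines a build script writing to dist are illustrative.

```dockerfile
FROM alpine:3.20.2 AS base
WORKDIR /app

# Hypothetical stage that installs Node.js and the project dependencies
FROM base AS dependencies
RUN apk add --no-cache nodejs npm
COPY package.json tsconfig.json ./
COPY src ./src/
RUN npm install

# Build stage: compile the TypeScript sources into /app/dist.
# Assumes package.json defines a "build" script.
FROM dependencies AS build
RUN npm run build

# A test run could be added the same way, for example:
# RUN npm test   (assuming a "test" script exists)
```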

Build + Run this docker image locally and explore the content:

understanding-docker-stages/scenario-03 $ docker build -t docker-stages:3 .

understanding-docker-stages/scenario-03 $ docker run -it docker-stages:3 /bin/sh

While exploring the docker image, you will notice that we now have the generated js file in the dist folder:

/app # ls -lah

total 60K

drwxr-xr-x 1 root root 4.0K Jul 23 06:18 .

drwxr-xr-x 1 root root 4.0K Sep 6 13:23 ..

drwxr-xr-x 2 root root 4.0K Jul 23 06:18 dist

drwxr-xr-x 47 root root 4.0K Jul 23 06:17 node_modules

-rw-r--r-- 1 root root 20.9K Jul 23 06:17 package-lock.json

-rw-r--r-- 1 root root 344 Jul 22 19:32 package.json

drwxr-xr-x 2 root root 4.0K Jul 23 06:15 src

-rw-r--r-- 1 root root 11.7K Jul 22 19:32 tsconfig.json

/app # cd dist

/app/dist # ls -lah

total 12K

drwxr-xr-x 2 root root 4.0K Jul 23 06:18 .

drwxr-xr-x 1 root root 4.0K Jul 23 06:18 ..

-rw-r--r-- 1 root root 44 Jul 23 06:18 index.js

/app/dist # exit

Scenario 4, create a production image and run the production application

Now we can create a clean production image from the previously executed stages. First, we install only the npm dependencies that are required in production. In a final stage we then copy all required files and run the production service.

Go to scenario-04 inside of understanding-docker-stages/scenarios.

understanding-docker-stages/scenario-03 $ cd ../scenario-04

Open the Dockerfile and check the content.
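One way such a Dockerfile could be laid out is sketched below. It is an illustration under stated assumptions rather than the repository's file: the stage aliases, the use of npm prune, and the target paths are hypothetical, chosen to match the directory layout shown in this scenario.

```dockerfile
FROM alpine:3.20.2 AS base
WORKDIR /app

FROM base AS dependencies
RUN apk add --no-cache nodejs npm
COPY package.json tsconfig.json ./
COPY src ./src/
RUN npm install

# Compile the TypeScript sources into /app/dist
FROM dependencies AS build
RUN npm run build

# Separate stage that keeps only the production dependencies
FROM dependencies AS prod-deps
RUN npm prune --omit=dev

# Final image: a clean base that receives only the build output and the
# production node_modules. No WORKDIR is set, so a shell starts in /.
FROM node:21 AS production
COPY --from=build /app/dist /app/dist
COPY --from=prod-deps /app/node_modules /app/dist/node_modules
CMD ["node", "/app/dist/index.js"]
```

Copying node_modules from an Alpine stage into the Debian-based node:21 image is only safe for pure JavaScript packages; packages with native bindings would need to be installed in a stage that uses the same base as the final image.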

Build + Run this docker image locally and explore the content:

understanding-docker-stages/scenario-04 $ docker build -t docker-stages:4 .

understanding-docker-stages/scenario-04 $ docker run -it docker-stages:4

This should print Hello, world! in your CLI. That means index.ts was successfully compiled and executed in your Docker image.

Explore the Docker image a bit. You will notice that you are not in the /app directory by default, because we used a clean base image (node:21).

understanding-docker-stages/scenario-04 $ docker run -it docker-stages:4 /bin/sh

# ls -lah

total 64K

drwxr-xr-x 1 root root 4.0K Sep 6 13:37 .

drwxr-xr-x 1 root root 4.0K Sep 6 13:37 ..

-rwxr-xr-x 1 root root 0 Sep 6 13:37 .dockerenv

drwxr-xr-x 1 root root 4.0K Jul 22 19:40 app

lrwxrwxrwx 1 root root 7 May 13 00:00 bin -> usr/bin

drwxr-xr-x 2 root root 4.0K Jan 28 2024 boot

drwxr-xr-x 5 root root 360 Sep 6 13:37 dev

drwxr-xr-x 1 root root 4.0K Sep 6 13:37 etc

drwxr-xr-x 1 root root 4.0K May 14 09:25 home

lrwxrwxrwx 1 root root 7 May 13 00:00 lib -> usr/lib

drwxr-xr-x 2 root root 4.0K May 13 00:00 media

drwxr-xr-x 2 root root 4.0K May 13 00:00 mnt

drwxr-xr-x 1 root root 4.0K May 16 13:57 opt

dr-xr-xr-x 239 root root 0 Sep 6 13:37 proc

drwx------ 1 root root 4.0K May 16 13:57 root

drwxr-xr-x 1 root root 4.0K May 14 01:44 run

lrwxrwxrwx 1 root root 8 May 13 00:00 sbin -> usr/sbin

drwxr-xr-x 2 root root 4.0K May 13 00:00 srv

dr-xr-xr-x 11 root root 0 Sep 6 13:37 sys

drwxrwxrwt 1 root root 4.0K May 16 13:57 tmp

drwxr-xr-x 1 root root 4.0K May 13 00:00 usr

drwxr-xr-x 1 root root 4.0K May 13 00:00 var

# cd app/dist/

# ls -lah

total 20K

drwxr-xr-x 1 root root 4.0K Jul 22 19:57 .

drwxr-xr-x 1 root root 4.0K Jul 22 19:40 ..

-rw-r--r-- 1 root root 44 Jul 22 19:37 index.js

drwxr-xr-x 6 root root 4.0K Jul 22 19:56 node_modules

# exit

Scenario 5, intermediate step to run development environment via docker

Now let's add a development stage in between, which allows us to make changes to the codebase and immediately see them in the Docker container.

Go to scenario-05 inside of understanding-docker-stages/scenarios.

understanding-docker-stages/scenario-04 $ cd ../scenario-05

Open the Dockerfile and check the content.
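A development stage like the one described could be sketched as follows. Only the alias dev and the dev script are taken from this article; the other stage names and instructions are assumptions, and the build and production stages are left out for brevity.

```dockerfile
FROM alpine:3.20.2 AS base
WORKDIR /app

# Hypothetical stage that installs Node.js and all project dependencies
FROM base AS dependencies
RUN apk add --no-cache nodejs npm
COPY package.json tsconfig.json ./
COPY src ./src/
RUN npm install

# Development stage: starts the "dev" script from package.json, which
# watches the sources and restarts on change. Mounting ./src over
# /app/src at run time lets local edits reach the running container.
FROM dependencies AS dev
CMD ["npm", "run", "dev"]
```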

At this point we need to build and execute this docker image with a few more arguments:

understanding-docker-stages/scenario-05 $ docker build --target dev -t docker-stages:5 .

understanding-docker-stages/scenario-05 $ docker run -v ./src:/app/src docker-stages:5

ℹ️ Commands explained:

- --target dev builds only up to a specific stage. dev is the alias defined for that stage in the Dockerfile.
- -v ./src:/app/src maps the local directory ./src into the Docker container. Changes made on the local computer are then directly visible inside the running container.

Apply changes to the scenario-05/src/index.ts file. You should immediately see the difference in the CLI output of the running container:

understanding-docker-stages/scenario-05 $ docker run -v ./src:/app/src docker-stages:5

> my-app@1.0.0 dev

> nodemon --exec ts-node src/index.ts

[nodemon] 3.1.4

[nodemon] to restart at any time, enter `rs`

[nodemon] watching path(s): *.*

[nodemon] watching extensions: ts,json

[nodemon] starting `ts-node src/index.ts`

Hello, world!!!

[nodemon] clean exit - waiting for changes before restart

[nodemon] restarting due to changes...

[nodemon] starting `ts-node src/index.ts`

Hello, world!!! I've changed the codebase

[nodemon] clean exit - waiting for changes before restart

That’s it for today. Happy coding!