There’s a time to develop your own tracking SDK

Vadim Shchenev · June 3, 2025 · 8 min read

At AutoScout24, we enjoy exploring the latest technologies, adopting modern approaches, and implementing complex technical solutions. As an Android engineer, you always have the opportunity to create something innovative that serves as a universal solution for various business cases and stands out as an intriguing technical idea. So, let’s imagine that you have three different endpoints for collecting internal tracking metrics, and specific business requirements mean that none of the existing tracking solutions on the market can handle them. Interesting? Let’s go!

What can be defined as a tracking SDK

Usually (at least on the web), we have an endpoint that accepts a JSON object or array with the metrics we want to send. All we need to do is handle these interactions, write some utility logic, and define some data transfer objects. In mobile applications, however, there is one important thing we must take into account if we do not want to lose data.

Users often use mobile applications while traveling. This could be an adventurous journey or a routine daily commute, such as a ride on the underground, which often means poor or interrupted internet connectivity. Since tracking metrics are critical for us, we need to cache events locally and retry sending them once the internet connection is available again.

On the other hand, we must not send duplicate tracking events, because duplicates produce unrealistic metrics. To cover this case, we need a clear rule for when an event can be removed from the cache.

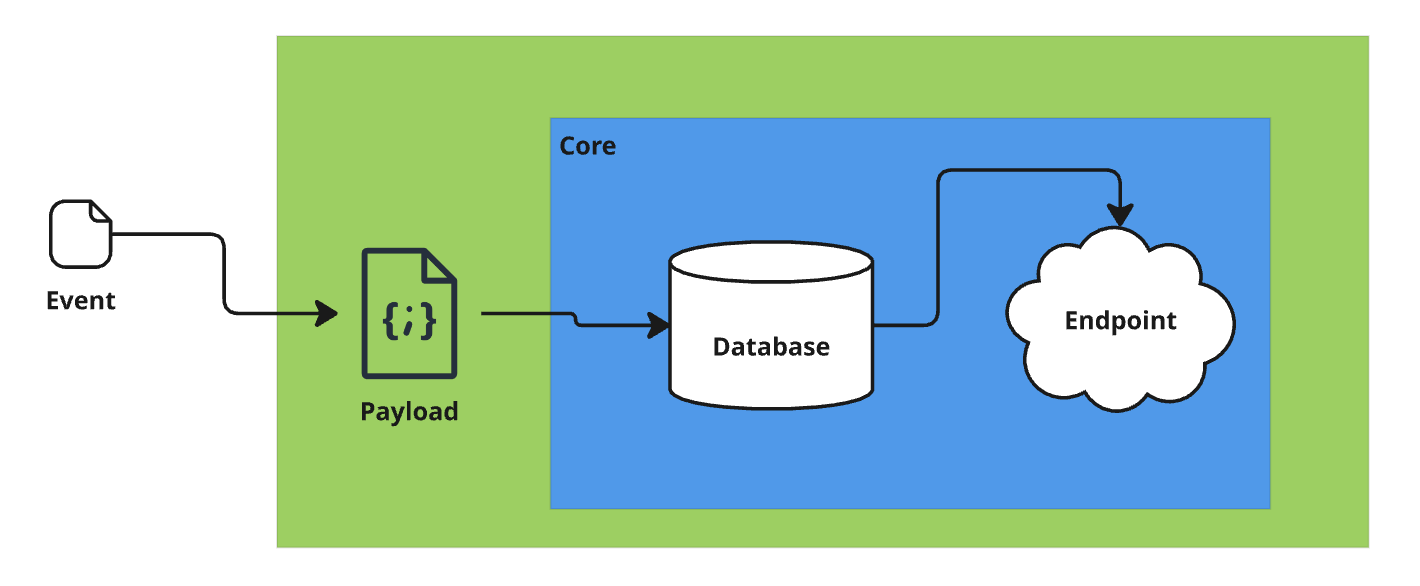

In summary, we have the following scenario:

- Step 1: Handle the moment when the event is triggered.

- Step 2: Save the event in the database.

- Step 3: Try to send the event to the endpoint.

- Step 4: Remove the event from the database only if Step 3 was successful.

Why remove events from the local cache only after Step 3 succeeds, rather than before it, or at the same moment while keeping them in runtime memory? The answer is simple. We’re talking about mobile apps, where users might close or kill the app right after an event is sent to the endpoint, while we’re still waiting for the response. If the event has already been removed from the database and the request fails, or the process dies before the response arrives, the event is lost with no chance of recovering it!
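To make the four steps and the "delete only after success" rule concrete, here is a minimal sketch in plain Kotlin. It assumes an in-memory map in place of the Room database and a `Sender` function in place of OkHttp; `EventStore`, `Sender` and `TrackingPipeline` are hypothetical names, not the SDK's real classes.

```kotlin
// Hypothetical in-memory stand-ins: the real SDK uses Room for storage and OkHttp for sending.
data class StoredEvent(val id: Long, val payload: String)

class EventStore {
    private val rows = linkedMapOf<Long, StoredEvent>()
    private var nextId = 1L

    // Step 2: persist the event before any network call happens.
    fun save(payload: String): StoredEvent =
        StoredEvent(nextId++, payload).also { rows[it.id] = it }

    fun all(): List<StoredEvent> = rows.values.toList()

    fun delete(ids: Collection<Long>) {
        ids.forEach { rows.remove(it) }
    }
}

// Returns true only for a 2xx response; throws if the request never completes.
fun interface Sender {
    fun send(events: List<StoredEvent>): Boolean
}

class TrackingPipeline(private val store: EventStore, private val sender: Sender) {
    // Step 1: an event was triggered.
    fun track(payload: String) {
        store.save(payload) // Step 2
        flush()             // Step 3, plus Step 4 on success
    }

    // Also what a retry job would call later for whatever is still cached.
    fun flush() {
        val pending = store.all()
        if (pending.isEmpty()) return
        // A thrown exception (no network, process dying mid-request) counts as "not delivered".
        val delivered = runCatching { sender.send(pending) }.getOrDefault(false)
        // Step 4: delete only the events the endpoint confirmed.
        if (delivered) store.delete(pending.map { it.id })
    }
}
```

Deleting by id, rather than clearing the whole table, means that events saved while a request is in flight stay queued for the next attempt.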

Business requirements

To understand the business requirements, we also need to take into account some technical limitations we might face.

The first, and very important, example is payload size: some SDKs limit the payload size per request. For instance, one popular tracking SDK allows at most 8192 bytes per request. Imagine a company that wants to log its search results page, where each page contains 20 listings. If the company logs a single event with an array of listings and every listing has 50 properties, that is 1,000 properties in one payload. The limit is exceeded as soon as a property takes more than about 8 bytes on average (8192 / 1000), and a JSON key-value pair rarely takes less, so this event will exceed the maximum allowed payload size.
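A quick way to check this kind of arithmetic is to build the payload and measure it. The sketch below uses hypothetical placeholder property names and values; only the 8192-byte limit and the 20 listings with 50 properties each come from the example above.

```kotlin
// Limit from the example above.
val MAX_PAYLOAD_BYTES = 8192

// Builds one listing with placeholder properties, e.g. {"prop0":"value-3-0",...}.
fun listingJson(index: Int, propertyCount: Int): String =
    (0 until propertyCount).joinToString(separator = ",", prefix = "{", postfix = "}") { p ->
        "\"prop$p\":\"value-$index-$p\""
    }

// One "search results" event carrying every listing of the page in a single array.
fun searchResultsEvent(listings: Int, propertiesPerListing: Int): String =
    (0 until listings).joinToString(
        separator = ",",
        prefix = "{\"event\":\"search_results\",\"listings\":[",
        postfix = "]}",
    ) { listingJson(it, propertiesPerListing) }

// The limit applies to bytes on the wire, so measure the UTF-8 encoding, not String.length.
fun payloadSizeBytes(json: String): Int = json.toByteArray(Charsets.UTF_8).size
```

Even with these short placeholder keys and values, every property takes about 20 bytes, so the full page lands well above the limit while a single small listing stays far below it.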

The common solution in this scenario is to split the event into multiple requests so that no single payload exceeds the analytics limit. However, splitting an event properly requires knowing its structure. In other words, the technical limitations of the analytics tool have to be handled outside the tracking SDK, in the code that knows what we want to send and how to split it.
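A greedy split could look like the sketch below. It assumes every listing is already serialized to a JSON string and that the event envelope is `{"event":"search_results","listings":[...]}`; both are hypothetical. The point is that the splitting code has to know this structure to produce valid, smaller events.

```kotlin
// Hypothetical event envelope around a list of already-serialized listings.
fun envelope(items: List<String>): String =
    items.joinToString(separator = ",", prefix = "{\"event\":\"search_results\",\"listings\":[", postfix = "]}")

fun byteSize(s: String): Int = s.toByteArray(Charsets.UTF_8).size

// Greedily packs listings into chunks whose serialized envelope stays within maxBytes.
fun splitByPayloadSize(listings: List<String>, maxBytes: Int): List<List<String>> {
    val chunks = mutableListOf<List<String>>()
    var current = mutableListOf<String>()
    for (listing in listings) {
        require(byteSize(envelope(listOf(listing))) <= maxBytes) {
            "A single listing does not fit into $maxBytes bytes"
        }
        current.add(listing)
        if (byteSize(envelope(current)) > maxBytes) {
            // The last listing overflowed: close the current chunk and start a new one with it.
            current.removeAt(current.lastIndex)
            chunks.add(current)
            current = mutableListOf(listing)
        }
    }
    if (current.isNotEmpty()) chunks.add(current)
    return chunks
}
```

Re-serializing the chunk on every step is quadratic, which is fine for a page of 20 listings; a production version would track the running size instead.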

Another important consideration is the request rate limit: each user can make only a limited number of requests within a given time frame. For one popular tracking SDK, it’s 10 requests per second per user and 100 requests per 100 seconds per user.
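One way to respect both limits on the client is a sliding-window check over the timestamps of recent requests. `RequestRateLimiter` is a hypothetical helper for illustration, not part of any tracking SDK, and timestamps are passed in explicitly to keep it deterministic.

```kotlin
// Each window is (length in ms, max requests inside it):
// 10 requests per second and 100 requests per 100 seconds, as described above.
class RequestRateLimiter(
    private val windows: List<Pair<Long, Int>> = listOf(1_000L to 10, 100_000L to 100),
) {
    private val sent = ArrayDeque<Long>() // timestamps (ms) of granted requests, oldest first
    private val longestWindow = windows.maxOf { it.first }

    fun tryAcquire(nowMs: Long): Boolean {
        // Forget requests that are outside every window.
        while (sent.isNotEmpty() && nowMs - sent.first() >= longestWindow) sent.removeFirst()
        val allowed = windows.all { (windowMs, limit) ->
            sent.count { nowMs - it < windowMs } < limit
        }
        if (allowed) sent.addLast(nowMs)
        return allowed
    }
}
```

A rejected request does not have to be dropped: the event stays in the local cache and goes out with a later attempt.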

Now let’s get back to our particular story and remember that we have more than one endpoint to take care of. In short, we have different services and different business cases.

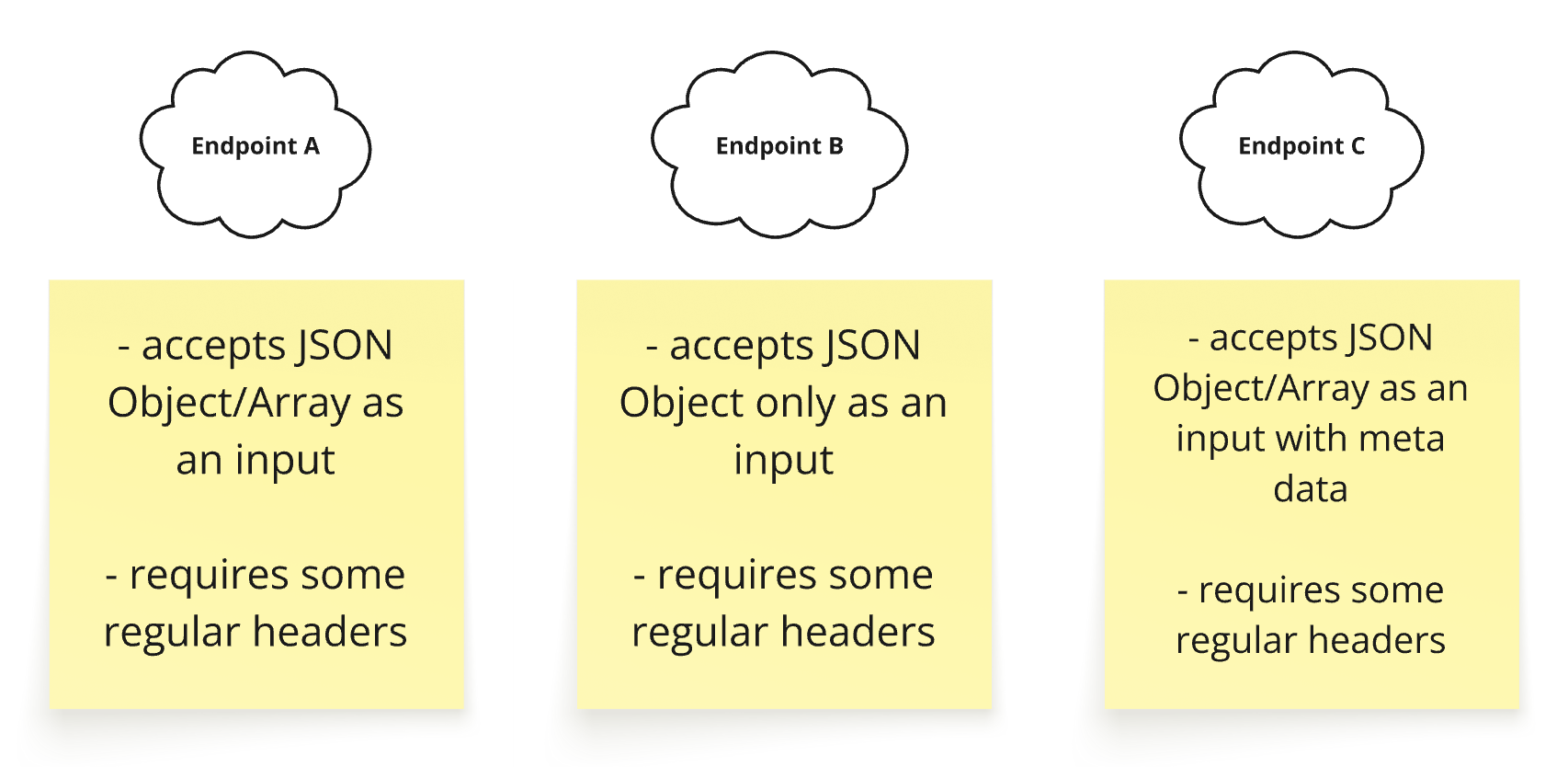

Let’s call them endpoints A, B, and C and look at their business requirements.

As we can see, due to some technical limitations, endpoint B accepts only one event per request. For endpoints A and B we send the data as is, without any modifications, while for endpoint C we have to wrap the data, although we can send multiple events per request.

Universal tracking SDK

Now let’s think about how to implement an SDK that works for every endpoint. First, we have to decide what belongs to the shared logic and what should be isolated. Since endpoints A, B, and C are different, each of them most likely needs its own payload format and its own secret keys for interacting with the endpoint itself.

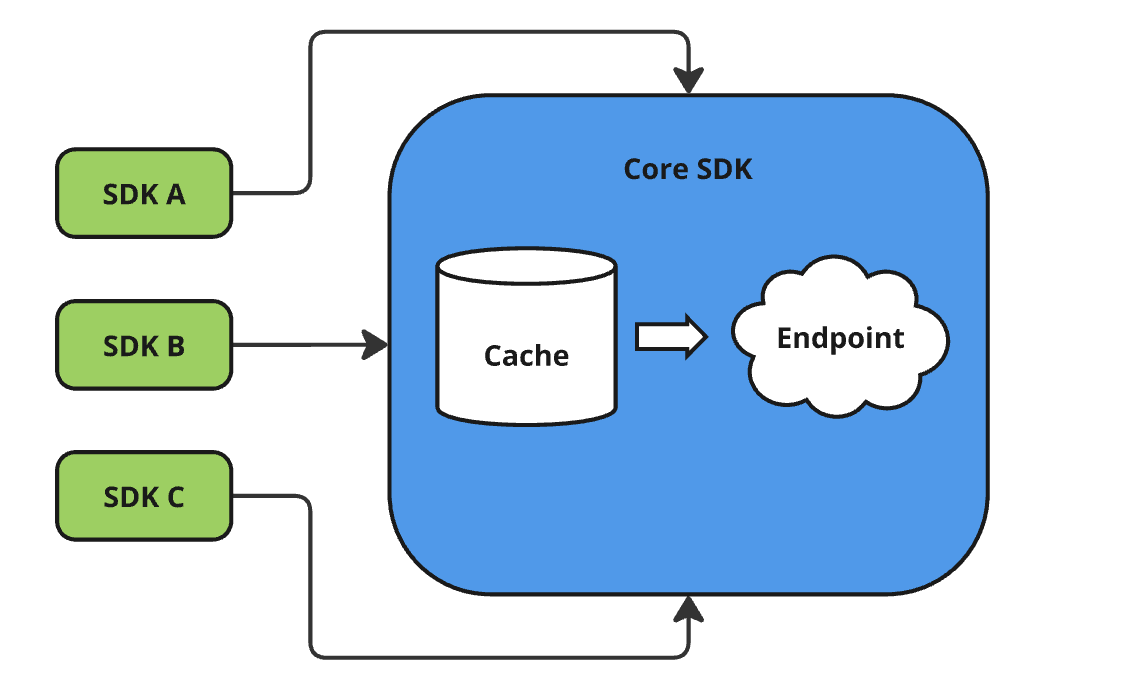

So, the first point: data transfer objects and SDK initialisation should be isolated, with SDKs A, B, and C independent of each other (one SDK per endpoint).

The logic for caching events and sending them with retries, on the other hand, should definitely be reusable.

So, the second point: we create a Core SDK and add it as a dependency of every tracking SDK: A, B, and C.

Let’s take a look at this schema to see our plan in perspective:

Implementation

We won’t go into every detail, but let’s look at the main ideas:

- Since all we need from a response is a successful status code (between 200 and 299), plain OkHttp is sufficient for our use case.

- As our endpoints accept plain JSON and we don’t need to handle response objects, the core SDK can work with events as JSON objects, stored as strings in the database.

- We should not make SDK consumers define the data (POJO) objects for each tracking SDK. Every tracking data model belongs in the codebase of its particular SDK, and SDK consumers get it through the SDK dependency.
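In code, the first two ideas stay small. The sketch below uses hypothetical names: `CachedEvent` is roughly what a row in the events table might hold, and `isDelivered` is the only decision the core SDK makes about a response. OkHttp's `Response.isSuccessful` applies the same 200..299 rule.

```kotlin
// What the core SDK might persist per event: no endpoint-specific model, just JSON text.
data class CachedEvent(
    val id: Long,
    val payload: String, // serialized JSON produced by SDK A, B or C
)

// The only thing read from a response: was the status code in the 2xx range?
fun isDelivered(statusCode: Int): Boolean = statusCode in 200..299
```

Because the core SDK only ever sees strings, adding a new endpoint SDK does not require any change to the caching or retry code.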

We decided to use the following technologies:

- Room for the database.

- OkHttp for networking.

- WorkManager for sending data and retrying.

Interaction with every SDK is based on two main function calls: initialise the SDK and track an event. We may also need to update some values at runtime, such as the user’s consent and/or authorization state.
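The public surface described above could be captured in a small interface. This is a hypothetical shape for illustration, not the SDKs' real API; `TrackerConfig`, `Tracker` and `RecordingTracker` are invented names, and rejecting `track()` before initialisation is an assumption of this sketch.

```kotlin
// Hypothetical initialisation parameters for one endpoint SDK.
data class TrackerConfig(val endpointUrl: String, val secretKey: String)

interface Tracker<E> {
    fun initialize(config: TrackerConfig)
    fun track(event: E)
    fun updateConsent(granted: Boolean)
    fun updateAuthorization(userToken: String?)
}

// In-memory stand-in that only records calls, to show the call contract.
class RecordingTracker<E> : Tracker<E> {
    var config: TrackerConfig? = null
        private set
    var consentGranted = false
        private set
    var userToken: String? = null
        private set
    val tracked = mutableListOf<E>()

    override fun initialize(config: TrackerConfig) {
        this.config = config
    }

    override fun track(event: E) {
        // Assumption for this sketch: tracking before initialisation is a programming error.
        checkNotNull(config) { "initialize() must be called before track()" }
        tracked += event
    }

    override fun updateConsent(granted: Boolean) {
        consentGranted = granted
    }

    override fun updateAuthorization(userToken: String?) {
        this.userToken = userToken
    }
}
```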

SDK initialization sets up the parameters used to generate headers and instantiates special delegate objects that create requests and add headers. All delegates implement one interface, and each endpoint SDK injects its own implementation into the core SDK (the main dependency of SDKs A, B, and C), which then works only with that interface. Why do we need to define how the request object is built? Look back at the business requirements section: the request structure differs for every endpoint (an array or an object for A, only an object for B, and a wrapper for C).
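A sketch of the delegate idea, under stated assumptions: the interface name, the `X-Api-Key` header and the `events` wrapper key are all hypothetical. Each endpoint SDK would pass one of these implementations to the core SDK, which only calls `build()`.

```kotlin
// What the core SDK hands to OkHttp: a body and the headers to attach.
data class PreparedRequest(val body: String, val headers: Map<String, String>)

interface RequestDelegate {
    fun build(payloads: List<String>): PreparedRequest
}

// Endpoint A: a single object, or a JSON array for several events.
class DelegateA(private val key: String) : RequestDelegate {
    override fun build(payloads: List<String>) = PreparedRequest(
        body = payloads.singleOrNull() ?: payloads.joinToString(",", "[", "]"),
        headers = mapOf("X-Api-Key" to key),
    )
}

// Endpoint B: exactly one object per request.
class DelegateB(private val key: String) : RequestDelegate {
    override fun build(payloads: List<String>): PreparedRequest {
        require(payloads.size == 1) { "Endpoint B accepts one event per request" }
        return PreparedRequest(payloads.single(), mapOf("X-Api-Key" to key))
    }
}

// Endpoint C: several events inside a wrapper object.
class DelegateC(private val key: String) : RequestDelegate {
    override fun build(payloads: List<String>) = PreparedRequest(
        body = payloads.joinToString(",", "{\"events\":[", "]}"),
        headers = mapOf("X-Api-Key" to key),
    )
}
```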

When we initialise SDKs A, B, and C, we can also define exactly how events should be sent by choosing a strategy:

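```kotlin
enum class Strategy {
    SINGLE_EVENT_DELAYED,        // the scheduled job sends cached events one per request
    SINGLE_EVENT_IMMEDIATELY,    // as above, plus an immediate send attempt after saving
    BUNCH_OF_EVENTS_DELAYED,     // the scheduled job sends cached events in batches
    BUNCH_OF_EVENTS_IMMEDIATELY  // as above, plus an immediate send attempt after saving
}
```

When our SDKs are initialised and ready, we can send events. Finally!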
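The four strategies combine two independent choices: whether to attempt delivery right after saving, and whether cached events may share one request. The sketch below repeats the enum with two helper properties and a grouping function added for illustration; `sendsImmediately`, `batches` and `requestGroups` are hypothetical, not part of the original SDK.

```kotlin
enum class Strategy {
    SINGLE_EVENT_DELAYED,
    SINGLE_EVENT_IMMEDIATELY,
    BUNCH_OF_EVENTS_DELAYED,
    BUNCH_OF_EVENTS_IMMEDIATELY;

    // true: also try to send right after the event is saved
    val sendsImmediately: Boolean
        get() = this == SINGLE_EVENT_IMMEDIATELY || this == BUNCH_OF_EVENTS_IMMEDIATELY

    // true: several cached events may go into one request
    val batches: Boolean
        get() = this == BUNCH_OF_EVENTS_DELAYED || this == BUNCH_OF_EVENTS_IMMEDIATELY
}

// How a retry job could group cached events into requests for a given strategy.
fun <T> requestGroups(strategy: Strategy, cached: List<T>): List<List<T>> =
    if (strategy.batches) listOf(cached).filter { it.isNotEmpty() } else cached.map { listOf(it) }
```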

After adding all of them to the main project as dependencies from our internal Artifactory instance (the Maven Publish plugin can be used for distribution), we can now use a function like:

```kotlin
// event is an instance of EventA, the model shipped with Tracking SDK A
TrackerA.track(event)
```

The EventA model belongs to Tracking SDK A, and SDKs A, B, and C are independent of each other.
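Here is a hypothetical sketch of that hand-off: SDK A owns its EventA model, turns it into a JSON string, and passes only that string on. The EventA fields, the `CoreTracker` interface and the hand-rolled serializer are assumptions for illustration; a real SDK would use a JSON library.

```kotlin
// Hypothetical fields; the real EventA lives in Tracking SDK A.
data class EventA(val name: String, val listingId: String?)

// The only thing the core SDK needs from an endpoint SDK: an opaque JSON payload.
fun interface CoreTracker {
    fun enqueue(payloadJson: String)
}

object TrackerA {
    private lateinit var core: CoreTracker

    fun initialize(core: CoreTracker) {
        this.core = core
    }

    fun track(event: EventA) {
        val fields = buildList<String> {
            add("\"name\":" + quote(event.name))
            event.listingId?.let { add("\"listing_id\":" + quote(it)) }
        }
        // Only a String crosses the boundary; the core SDK never sees EventA.
        core.enqueue(fields.joinToString(separator = ",", prefix = "{", postfix = "}"))
    }

    // Minimal JSON string escaping (backslashes and quotes) for this sketch only.
    private fun quote(value: String): String =
        "\"" + value.replace("\\", "\\\\").replace("\"", "\\\"") + "\""
}
```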

Tracking SDK A serialises the event into a string and passes it to the core SDK. Then, depending on the strategy, the core SDK sends the event immediately and/or schedules a WorkManager job (for retries) to run in 5 minutes.

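```kotlin
// runCatching keeps tracking failures from crashing the host app; the result is ignored
runCatching {
    TrackingPojo(payload = event.payload).apply {
        // Step 2: persist first, so the event survives app kills and failed requests
        database.save(this)
        // Make sure a WorkManager job exists to (re)send whatever is still cached
        workerManager.scheduleWorkIfNotScheduled(context, config)
        // Step 3: the *_IMMEDIATELY strategies also try to deliver right away
        if (config.strategy == Strategy.BUNCH_OF_EVENTS_IMMEDIATELY ||
            config.strategy == Strategy.SINGLE_EVENT_IMMEDIATELY
        ) {
            dataSender.postData(listOf(this), config)
        }
    }
}
```

The data sender here is just an object wrapping OkHttp, with the request builder delegate discussed above injected into it.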

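And, of course, we can define a retry policy for our WorkManager job:

```kotlin
// Set on the WorkRequest builder: the first retry waits 5 minutes, later retries wait exponentially longer
setBackoffCriteria(BackoffPolicy.EXPONENTIAL, 5, TimeUnit.MINUTES)
```

The WorkManager job also takes the strategy into account: it sends a bunch of events at once, or one single event per request if the endpoint supports only that format.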
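To get a feel for what that policy means in practice, here is a rough model of the resulting retry delays. It assumes WorkManager's behaviour of doubling the delay on every attempt and capping it at `WorkRequest.MAX_BACKOFF_MILLIS` (five hours); it is an illustration, not WorkManager code, and `backoffMinutes` is a hypothetical helper.

```kotlin
val INITIAL_BACKOFF_MINUTES = 5L
val MAX_BACKOFF_MINUTES = 5L * 60 // five hours, WorkManager's backoff cap

// attempt = 1 is the first retry after a failed run.
fun backoffMinutes(attempt: Int): Long {
    require(attempt >= 1) { "attempt starts at 1" }
    var delay = INITIAL_BACKOFF_MINUTES
    repeat(attempt - 1) { delay = minOf(delay * 2, MAX_BACKOFF_MINUTES) }
    return delay
}
```

So a user who stays offline for a long trip is retried at 5, 10, 20, 40, 80 and 160 minutes, and then every five hours, which keeps battery and network usage modest while the events wait safely in the database.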

One short but important note: as agreed with the backend teams, we record the timestamp at the moment the SDK handles the event and saves it to internal storage, not at the moment it is actually sent. This guarantees that the real order of events can be restored even if some of them failed at first and were sent with a delay.
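A small sketch of why this works: events can reach the backend out of order after retries, but sorting by the capture timestamp restores the user's real sequence. `TimedEvent`, `capture` and `restoreOrder` are hypothetical names, and the clock is injectable so the example is deterministic.

```kotlin
data class TimedEvent(val name: String, val capturedAtMs: Long)

// The timestamp is set once, when the SDK saves the event, and never changed on (re)send.
fun capture(name: String, clockMs: () -> Long = System::currentTimeMillis): TimedEvent =
    TimedEvent(name, clockMs())

// What the backend can do with events received in any order.
fun restoreOrder(received: List<TimedEvent>): List<String> =
    received.sortedBy { it.capturedAtMs }.map { it.name }
```

If the timestamp were taken at send time instead, a retried event would appear to have happened after everything that was delivered on the first attempt.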

Conclusion

We released all three SDKs (plus the core SDK) a few months ago, and I’d say we haven’t encountered any critical issues: everything works and has been stable. We also decided to keep the tracking SDKs in a standalone GitHub project instead of including them as modules in the main one. Why? It makes it easier to guarantee their stability and monitor changes, and it ensures that global updates or restructurings of our main project will not affect the tracking SDKs.

That’s it!